When we first started The Next Platform a decade ago, there was not really much of a reason to cover AMD’s datacenter efforts. But, we are a hopeful people here at what was originally called The Platform, and from the get-go we knew that the market needed competition for high performance CPUs and GPUs and that AMD had the will and would find a way to compete with the likes of Intel and Nvidia in the markets that they ruled.

No one could have predicted how fast Nvidia would expand in the wake of GenAI, and no one could have predicted how far – and how fast – Intel would fall.

And for those of you who believed that the Number Two vendor can work harder and can succeed, as we did, well, we all have the satisfaction of being right. Which is a beautiful thing, ain’t it? But what is truly a thing to behold – and what is far more important than being right, after all – is how far AMD has come in a decade and to ponder what AMD might do in the next decade as computing inevitably evolves in the datacenter and in our handheld devices.

It is with that broad expanse in mind that we contemplate the financial results that AMD just turned in for 2024. The company is humming along on all fronts, but its numbers also show that it is still incredibly expensive to create the high end PC and server devices that the company proudly crafts and peddles. It is also always a challenge to rake in the profits when a company believes in open source software and cannot build a moat the way other companies in the history of IT have been able to do – IBM and Nvidia, to name two that are still doing it, largely because of the proprietary and closed source nature of their software stacks.

In the first year that this publication existed, AMD had $3.99 billion in revenues, and we estimate that only about $100 million of that was for datacenter products – mostly for Opteron processors for industrial and embedded use cases. But that year, 2015, was pivotal in that AMD was determined to get back into X86 CPUs for servers and laid the groundwork for the Epyc processors, which are the highest performance X86 CPUs on the market and, at list price anyway, yield the best bang for the buck on many metrics.

That didn’t happen overnight, but in stages, across five generations of Epyc processors, each better than the last. Ryzen CPUs for PCs have used much of the same technology to take on Intel’s hegemony on the desktop and in the laptop, too.

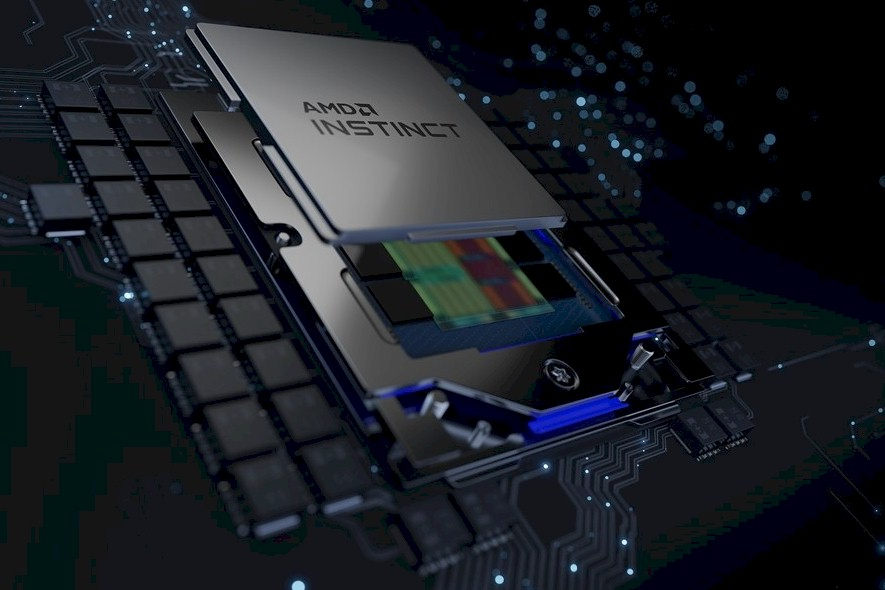

And so, in 2024, by comparison, AMD posted a record $25.79 billion in sales, up 13.7 percent, with net income of $1.62 billion, up by a factor of 1.9X but only representing 6.4 percent of revenues. Perhaps more importantly, last year the datacenter business at AMD, which includes Epyc CPUs, Instinct GPUs, Pensando DPUs, and Xilinx FPGA accelerators, accounted for $12.58 billion in sales, or 48.8 percent of the revenues. In both Q3 and Q4 of 2024, datacenter drove in excess of half of AMD’s revenues and nearly 60 percent of its operating income.

It has been a decade of tremendous effort on the part of AMD and its key partners – notably its foundry, Taiwan Semiconductor Manufacturing Co, but also including the hyperscalers and cloud builders who are always looking for cheaper compute and more of it.

That gives you the broad strokes over the decade. But let’s drill down into the fourth quarter and see what 2025 is looking like.

In the December quarter, AMD’s overall revenues were up 24.2 percent to $7.66 billion, but net income was down 27.7 percent to $482 million. That net income was a mere 6.3 percent of revenues, and was not as good as the year ago period or the prior quarter because AMD cashed in some tax benefits earlier in 2024 and also did not have to make such huge investments in its future GPU roadmap even a year ago as it is doing now.

It takes money to make money, as you well know. And many of the investments that AMD makes in process and packaging technologies are done at the corporate level and do not directly impact the operating income of the individual units. (This is an accounting choice.)

In Q4 2024, AMD’s datacenter business raked in $3.86 billion, up 69.1 percent year on year and up 8.7 percent sequentially. The Data Center group had $1.16 billion in operating income, up 73.7 percent year on year. This operating margin was 30 percent of revenues, which is more than ten points higher than the rate that AMD’s Client group brings in for laptop and desktop chips, which is twice that of gaming GPUs.

The Embedded group, which is mostly Xilinx FPGAs but also custom game console processors and graphics cards, had $923 million in sales, down 12.7 percent, with operating income of $362 million, down 21.5 percent. But, this FPGA and embedded compute business consistently delivered 40 percent operating margins last year, which makes it the most profitable part of AMD.
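The margin figures quoted for the quarter are simple ratios, and they can be rechecked from the numbers in the text. Here is a minimal sketch; the `margin_pct` helper and the dictionary labels are our own illustrative names, and the inputs are the rounded figures cited above, so the results can differ from AMD’s own reporting by a tenth of a point or so.

```python
def margin_pct(income: float, revenue: float) -> float:
    """Return income as a percentage of revenue."""
    return 100.0 * income / revenue


# Q4 2024 figures as quoted in the text, in billions of US dollars.
# Each entry is (income, revenue); labels are illustrative, not AMD's.
q4 = {
    "AMD overall (net income)": (0.482, 7.66),
    "Data Center (operating income)": (1.16, 3.86),
    "Embedded (operating income)": (0.362, 0.923),
}

# Round to one decimal place, which is the precision used in the text.
margins = {
    name: round(margin_pct(income, revenue), 1)
    for name, (income, revenue) in q4.items()
}

for name, pct in margins.items():
    print(f"{name}: {pct:.1f} percent of revenues")
```

With the rounded inputs, the Embedded margin lands a little under the 40 percent level cited for the full year, which is consistent with a quarter in which operating income fell faster than sales.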

What everyone has been watching like a hawk since this time last year is how AMD’s Instinct GPU business is growing each quarter and what the annual revenue stream would be for 2024.

Here is the final iteration of this table, which shows that AMD did indeed break just above $5 billion in Instinct GPU sales as we started predicting many months ago as AMD kept raising its forecast, which started at a more conservative “more than $2 billion” this time last year. Take a gander:

What AMD chief executive officer, Lisa Su, and AMD chief financial officer, Jean Hu, did not do was offer specific guidance for GPU sales for the first quarter of 2025 or the full year. But Su and Hu did give Wall Street a few hints on how to make their models, and we took the hints, too.

It doesn’t sound like AMD will be growing that Instinct GPU business by 2X in 2025, which we are sure many investors were hoping it might. It could still happen, and Su did say that this part of the business would be worth “tens of billions of dollars of annual revenue over the coming years,” and we agree. But probably not in 2025. As we have been saying, Nvidia has access to more HBM memory from Samsung, SK Hynix, and Micron Technology and more CoWoS interposer technology for lashing it to GPU chips from TSMC, and that, more than any other factor, determines how many Instinct GPUs AMD can make. We are sure that it could sell 10X more devices if it could get its hands on enough HBM and CoWoS to manufacture Instinct cards.

Based on what Su and Hu said on the call with Wall Street, we reckon that AMD sold $1.98 billion in Epyc CPUs in Q4, up 12 percent from the year ago period and up 12.5 percent sequentially. The server CPU upgrade cycle is building some momentum, for AMD at least if not for Intel, which had to cut prices on its “Granite Rapids” Xeon 6 processors by as much as 30 percent recently to better compete with AMD’s prior generation “Genoa” Epyc 9004 series CPUs. Sales of the Genoa CPUs are still rising, and the “Turin” Epyc 9005s launched as 2024 came to a close are ramping as expected, according to Su.

We think that Instinct GPU sales were up by 4.2X year on year to $1.75 billion, which was only a 6 percent rise sequentially from Q3 2024. This number fits what Su said: Datacenter was up about 9 points sequentially and CPUs were up a little more and GPUs were up a little less.

That leaves $130 million for DPUs and FPGAs in the datacenter, which is still noise in the data.
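The split above is a residual calculation: take the reported datacenter total, subtract the two estimates, and whatever is left is DPUs and FPGAs. A small sketch of that arithmetic follows; the variable names are ours, and the Epyc and Instinct inputs are estimates from the text, not figures that AMD reports.

```python
# Q4 2024 datacenter revenue, in billions of US dollars.
datacenter_total = 3.86    # reported Data Center group revenue
epyc_estimate = 1.98       # estimate from the text, not an AMD figure
instinct_estimate = 1.75   # estimate from the text, not an AMD figure

# Whatever the two estimates do not cover is attributed to DPUs and FPGAs.
other = round(datacenter_total - epyc_estimate - instinct_estimate, 2)

parts = {
    "Epyc CPUs": epyc_estimate,
    "Instinct GPUs": instinct_estimate,
    "DPUs and FPGAs": other,
}

# Share of the datacenter total for each part, in percent.
shares = {name: round(100.0 * value / datacenter_total, 1)
          for name, value in parts.items()}

for name, value in parts.items():
    print(f"{name}: ${value:.2f} billion, {shares[name]:.1f} percent")
```

Because the remainder is small, modest errors in either estimate would move it by a large proportion, so the $130 million figure is best read as an order of magnitude.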

Just for fun, we took a stab at projecting annual Epyc CPU and Instinct GPU revenues. This is for the part of the two lines in the chart above that are to the right of the vertical red line.

Epyc CPUs saw 25.7 percent growth to $7.04 billion in 2024, and we think it will slow a bit in 2025 but still grow at “strong double digits” which we take to mean above 20 percent. We called it 21 percent on average for the year, with weaker growth in Q1 and stronger growth in Q4.

We believe that GPU sales will closely track CPU sales at AMD going forward – and not for any causal reason. We think somewhere around 68 percent growth is a good baseline, which is a remarkable deceleration for a business that grew by a factor of 7.6X last year, especially considering the explosive demand that Nvidia is seeing for its GPUs. But again, we think the gating factor is HBM and CoWoS, and AMD can’t grow any faster than its partners can ramp up supply.

Anyway, if you do the math on that, our first pass prediction for Instinct GPU sales in 2025 is $8.44 billion, nearly the same as the $8.52 billion we are projecting for Epyc CPU sales. These are essentially a statistical tie at this point – and again, the numbers are just working out that way and there is not a causal link. The year is still young, and we shall see how accurate this prediction, which is based on gut as much as hints, turns out to be. Even AMD doesn’t really know at this point.
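For those who want to do that math themselves, here is a rough sketch. The function names `project` and `implied_base` are ours, and the growth rates are the guesses stated above, not guidance from AMD. Since the text only says that 2024 Instinct sales were just above $5 billion, the sketch backs the implied 2024 base out of the 2025 projection instead of assuming one.

```python
def project(base: float, growth: float) -> float:
    """Apply a fractional growth rate (0.21 means 21 percent) to a base."""
    return base * (1.0 + growth)


def implied_base(projected: float, growth: float) -> float:
    """Back out the base that a projection and its growth rate imply."""
    return projected / (1.0 + growth)


# All revenue figures are in billions of US dollars, taken from the text.
epyc_2024 = 7.04
epyc_2025 = project(epyc_2024, 0.21)         # 21 percent growth guess

instinct_2025 = 8.44                          # first pass prediction
instinct_2024 = implied_base(instinct_2025, 0.68)  # 68 percent growth guess

# Gap between the two 2025 projections, as a percent of the Epyc figure.
gap_pct = 100.0 * (epyc_2025 - instinct_2025) / epyc_2025

print(f"Epyc 2025 projection: ${epyc_2025:.2f} billion")
print(f"Implied Instinct 2024 base: ${instinct_2024:.2f} billion")
print(f"Gap between the 2025 projections: {gap_pct:.1f} percent")
```

The implied 2024 Instinct base comes out just above $5 billion, which lines up with the full-year figure mentioned earlier, and the gap between the two 2025 projections is under 1 percent, hence the statistical tie.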

Su said on the call that AMD began volume production of the Instinct MI325X GPU accelerator in the fourth quarter. The MI325X is based on the same “Antares” GPU used in the existing MI300A and MI300X GPU accelerators. It has 256 GB of HBM3E memory with 6 TB/sec of bandwidth on its package but the same raw, mixed precision compute performance as the MI300X, which only has 192 GB of HBM3 memory with 5.3 TB/sec of bandwidth. The MI325X is aimed at the Nvidia “Hopper” H200, which has only 141 GB of HBM3E memory with 4.8 TB/sec of bandwidth.

Of course, Nvidia has its “Blackwell” B100 and B200 accelerators, announced nearly a year ago, and the B300s with even fatter memory are on the way, so AMD is pulling in the MI355X from “some time in the second half of 2025” into “mid year” to better compete against Nvidia Blackwells.

The MI350 series, of which the MI355X is but one member, is based on a new CDNA 4 architecture that will deliver 1.8X the performance of the MI325X. For the MI355X, that works out to 2.3 petaflops at FP16 precision and 4.6 petaflops at FP8 precision, with 9.2 petaflops at FP6 or FP4 precision. (This is without sparsity support on.) The CDNA 4 architecture is the first from AMD to deliver FP6 and FP4 low precision floating point support. The MI355X has 288 GB of HBM3E memory with 8 TB/sec of bandwidth.

The Blackwell B200 from Nvidia has 192 GB of HBM3E memory and 8 TB/sec of bandwidth. Without sparsity support, the B200 is rated at 9 petaflops at FP4 precision and 4.5 petaflops at FP8 precision – essentially neck and neck in terms of raw performance and with less HBM memory than the AMD alternative.
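To put the feeds and speeds cited above side by side, here is a small sketch that computes AMD-to-Nvidia ratios for each matchup. The `Accelerator` class and the `ratio` helper are illustrative names of our own, and the inputs are only the figures quoted in this article (dense, without sparsity), not a complete spec sheet for any device.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Accelerator:
    """Memory figures as quoted in the text; not a full spec sheet."""
    name: str
    hbm_gb: float          # HBM capacity in GB
    bandwidth_tbs: float   # HBM bandwidth in TB/sec


def ratio(amd: float, nvidia: float) -> float:
    """AMD figure divided by Nvidia figure, rounded to two decimals."""
    return round(amd / nvidia, 2)


mi325x = Accelerator("MI325X", 256, 6.0)
h200 = Accelerator("H200", 141, 4.8)
mi355x = Accelerator("MI355X", 288, 8.0)
b200 = Accelerator("B200", 192, 8.0)

# Peak petaflops without sparsity, as quoted in the text.
mi355x_pf = {"FP8": 4.6, "FP4": 9.2}
b200_pf = {"FP8": 4.5, "FP4": 9.0}

for amd, nv in ((mi325x, h200), (mi355x, b200)):
    print(f"{amd.name} vs {nv.name}: "
          f"{ratio(amd.hbm_gb, nv.hbm_gb)}X capacity, "
          f"{ratio(amd.bandwidth_tbs, nv.bandwidth_tbs)}X bandwidth")

for precision in ("FP8", "FP4"):
    print(f"MI355X vs B200 at {precision}: "
          f"{ratio(mi355x_pf[precision], b200_pf[precision])}X")
```

On these numbers, the compute ratios sit within a few percent of parity, while the memory capacity gap is much wider, which is the sense in which the two devices are neck and neck on raw performance but not on HBM.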

You can see why AMD moved the CDNA 4 architecture forward from the MI400 series of GPUs, and is racing to get the MI355X into the field.
